the word “subject”, referring to a person, is fascinating.

on the one hand it means a subordinate, a “subject of” someone who rules them.

on the other hand it means a locus of agency: a subject can act, not merely be acted upon (which is the role of an “object”).

subject in contrast to object, vs subject in contrast to king.

among the most selfish, but perhaps the least self-aware person in all of human history. https://xcancel.com/elonmusk/status/1974817165283893574

we’ve redefined meritocracy as a tournament to acquire the best “cheat codes”.

i guess they’re real guys!

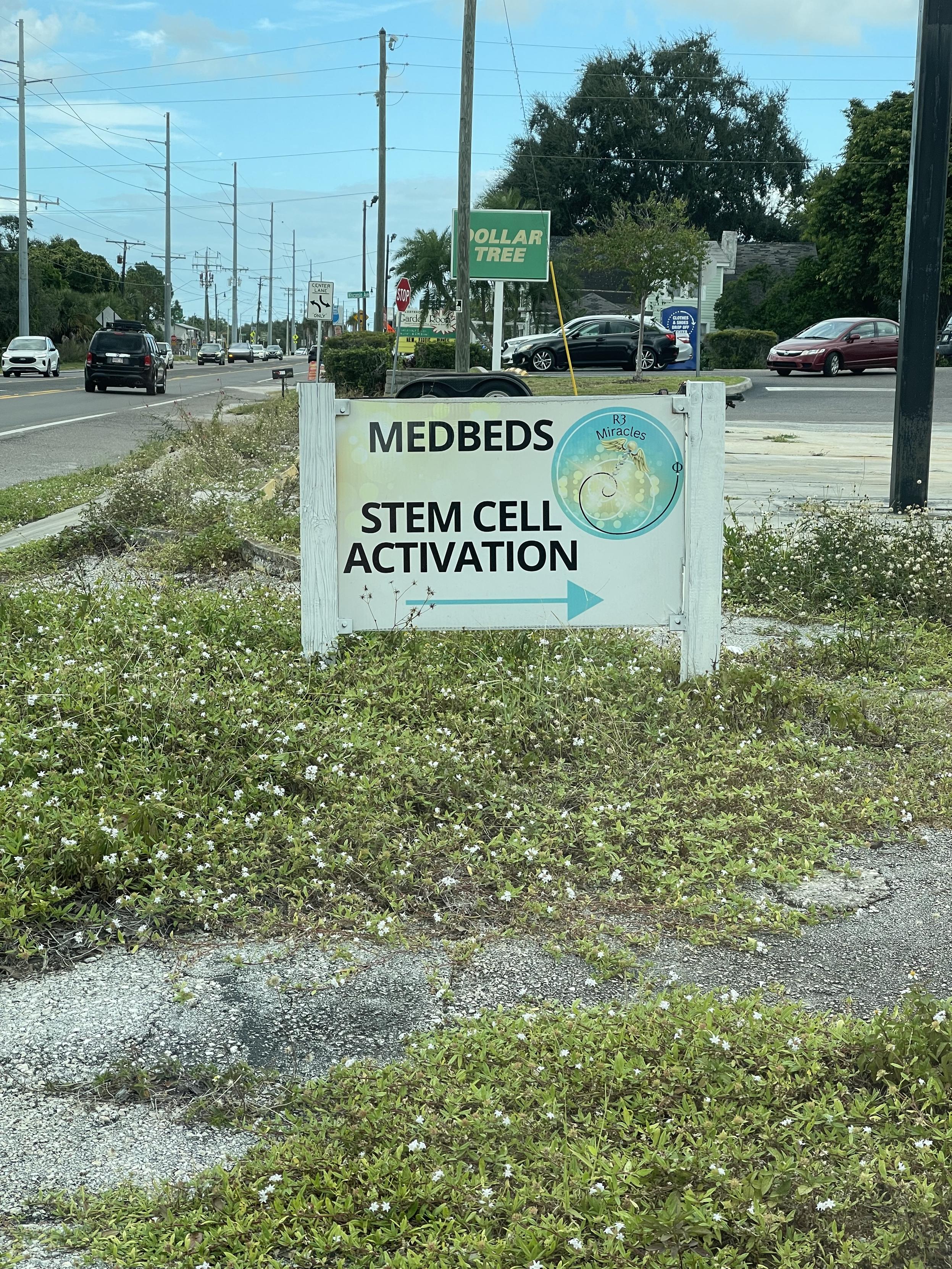

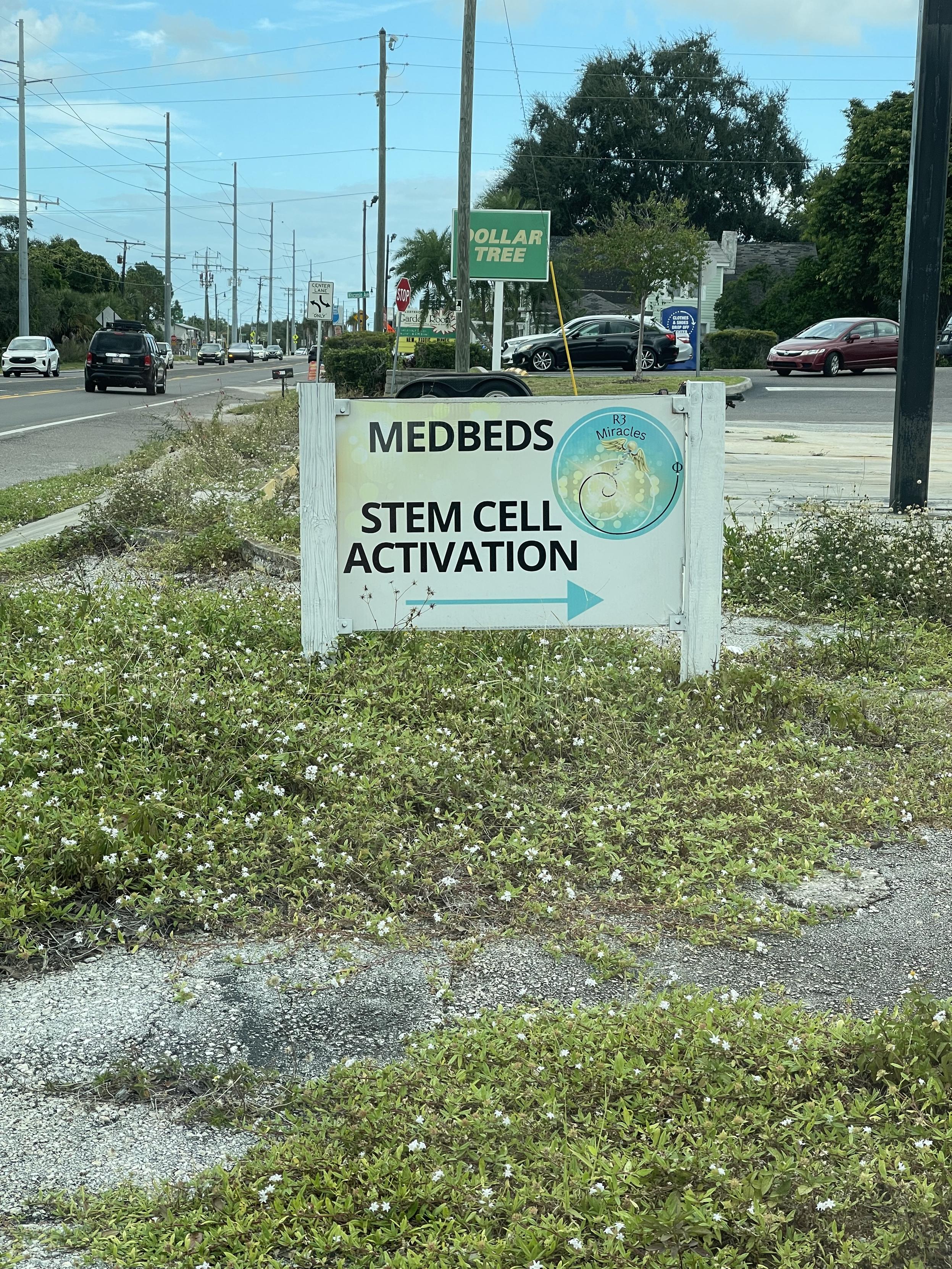

Photo of a sign advertising “medbeds” and “stem cell activation” in Pinellas County.

“The lesson we should be taking from LLMs is the immense social value there is in having all kinds of material – all kinds of products of human intellectual labor – freely available online. They should be reminding us of the early utopian promise of the web.” @jwmason https://jwmason.org/slackwire/actual-intelligence/

kind of ironic that they used to throw around “soy boy” as an epithet.

which is worse, to hate or to be hated?

what if there were a Halloween Everyday 🎃 app where you could post the location anytime you see anyone in a cool mask!

@isomorphismes they say it, sure.

but their behavior is all about denying anything not immediately obvious, the “show me” state taken to an extreme.

global warming? hoax. you say vaccines reduce transmission and disease severity even if you can see they do not entirely stop transmission or prevent disease? absurd. starting trade wars? only foreigners will pay. our guy ignores all legal constraints to do what he feels like? we just applaud, no risk of that ever getting turned on our asses.

1) you can just tell these people to fuck off.

2) work strategically to arrange things so you will continue to be able to just tell these people to fuck off despite the costs they will try to impose on you for doing so.

3) in any case, just tell them to fuck off.

the modern republican coalition is made up of people who are sure that any supposed connection between fuck around and find out is a fabrication of libs and pointy headed elites and the deep state.

Text: The single most important problem for pro-democracy forces is that too many people — especially in position of power — seem unable to truly believe that we are living in a consolidating competitive authoritarian regime. Perhaps they are too habituated to the "rules" of the system that no longer exists. Perhaps they still cling to the drug of American exceptionalism, which makes it difficult for them to accept that "it can happen here." Perhaps they understand it intellectually, but find it too difficult to make the necessary paradigm shift.

one thing about wind and solar is they may be less susceptible to accident or sabotage than fossil fuel infrastructure. (but what about large-scale batteries?)

now which article of the Constitution was it that mentioned the Power to declare War? https://www.nytimes.com/2025/10/02/us/politics/trump-drug-cartels-war.html

if the president lacks the power to do a thing but he gets away with doing it anyway, what does it even mean to say he lacks the power to do the thing?

my AI company will be called Monkey’s Paw.

Text: Let me say all this again: Coase developed a world-changing idea in 1937, simply by trying to think through gaps between the idealizations of economic theory and the realities of business practice; he did not publish an articulation of that idea that 'took' until he was 50 years old; the articulation that brought his ideas to fame was only published because a group of well-resourced academics were so convinced he was wrong; for another 30 years, his ideas were put to uses almost completely contrary to those he had intended. It is only now, 15 years after his death, over 50 years since Social Cost, and 80 years after he first developed his original insight, that we can fairly say that his ideas are being put to use in earnest.
